
The CFD Vision 2030 Integration Committee advocates for, inspires and enables community activities recommended by the vision study for revolutionary advances in the state of the art of the computational technologies needed for analysis, design and certification of future aerospace systems.

The committee's three grand challenge problems were reexamined and refined this year: low-speed, high-lift aerodynamics predictions; full engine turbofan unsteady simulations; and computational fluid dynamics-in-the-loop Monte Carlo simulation for space vehicle design. The refinements focus on achieving the challenge goals where possible, or on determining where additional resources are required to achieve them. These challenges direct development work toward complex real-world issues that exercise all elements of the original 2030 Vision Roadmap. A key goal of this effort is to energize the aerospace computational aerodynamics communities through collaboration across technology providers, accelerating the use of efficient and robust computational tools. The ultimate aim is products with better aerodynamic performance that are environmentally cleaner, more fuel efficient and safe to fly, developed at lower nonrecurring cost and risk.

Progress continued this year toward meeting the Roadmap milestones in high-performance computing, physical modeling and robust meshing of complex geometries. Researchers at NASA’s Langley Research Center in Virginia ported the generic gas version of the FUN3D flow solver to run on graphics processing units, and in January they reported a more than tenfold reduction in time to solution. FUN3D developers spent most of this year making improvements and applying them to supersonic retropropulsion problems and to Multi-Purpose Crew Vehicle abort simulations and database development.

Multiple groups reported physical modeling improvements in wall-modeled large eddy simulation, along with demonstrations on complex configurations. Researchers at Stanford and the University of Pennsylvania showed results in August indicating that wall-modeled large eddy simulation methods can be applied to flows with highly 3D boundary layers, but that some errors are introduced if a simple 2D-based wall model is used. Hypersonics researchers at the University of Minnesota and Texas A&M took on the challenge of applying wall-modeled large eddy simulations to complex geometries and high-speed flows, and used direct numerical simulation data to assess the accuracy of these models.

The year brought evolutionary progress and maturation of methods in mesh generation. Improvements in the MIT and Syracuse University Engineering Sketch Pad software and NASA’s refine code have brought designers closer to the goal of “automatic generation of mesh on complex geometry on first attempt.”

In August, Langley demonstrated automated meshing and solution of a supersonic Space Launch System configuration with support hardware in a detailed wind tunnel test section, with only minor user intervention to repair the input geometry. A detailed review by the Roadmap subcommittee showed that while there was progress on key technology demonstrations, many of the original milestones were behind schedule. The subcommittee recommended minor updates, including moving the HPC milestone “Demonstrate efficiently scaled CFD simulation capability on an exascale system” out one year and delaying some of the multidisciplinary optimization milestones by two years, in both cases to reflect the current state of the art.

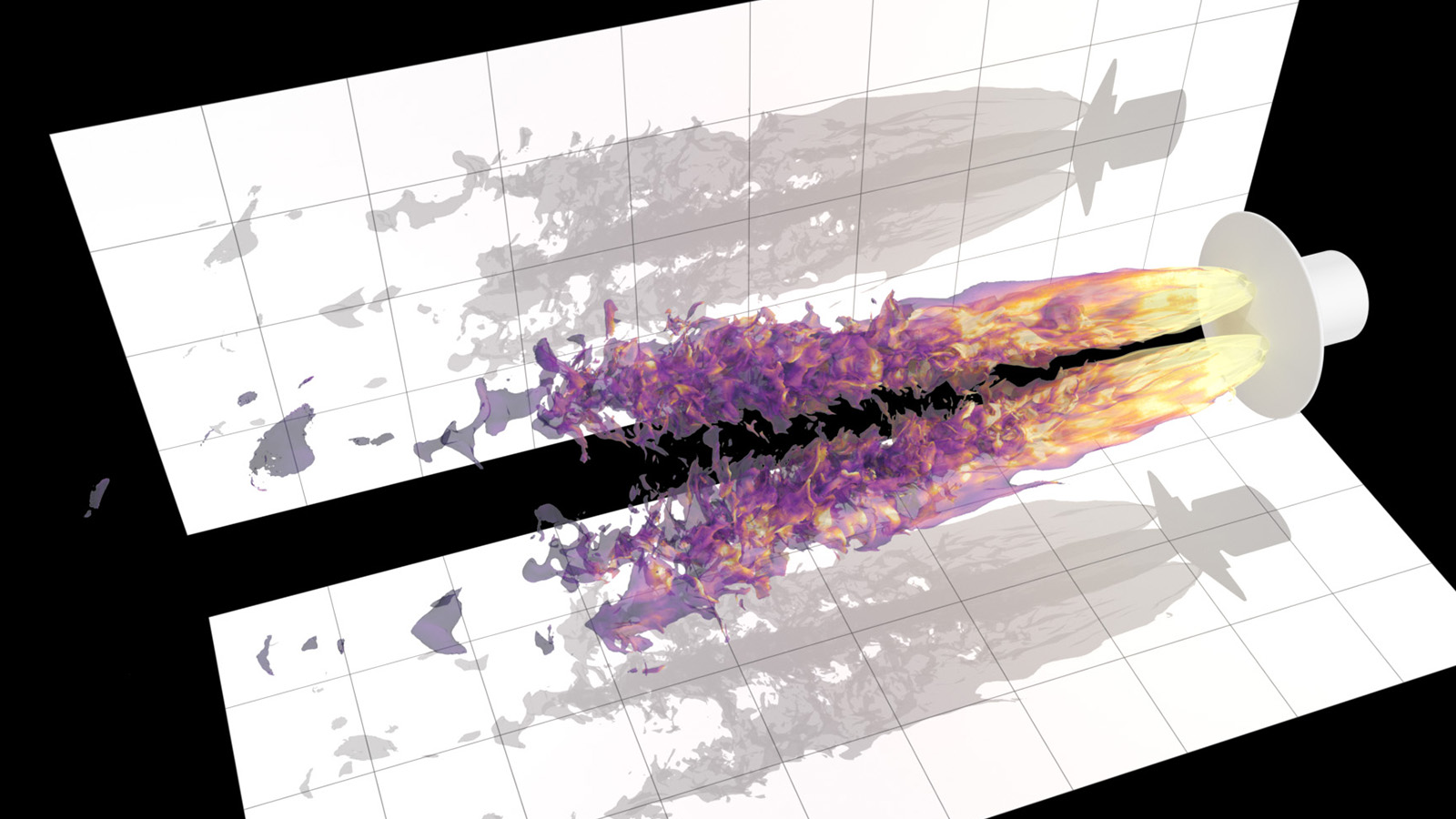

Efforts continued throughout the year toward the CFD Vision 2030 and 2024 NASA Transformational Tools and Technologies milestone to demonstrate an exascale solution capability, or throughput of approximately 10^18 floating point operations per second. The end goal is to reduce analysis and design times by multiple orders of magnitude. For instance, an international team of 20 researchers continued applying the FUN3D CFD solver to investigate aero-propulsive effects for human-scale Mars entry. The researchers from NASA, Kord Technologies, the National Institute of Aerospace, NVIDIA in Berlin and Old Dominion University used computational time awarded in November 2020 by the U.S. Department of Energy. This year’s award consisted of 770,000 node-hours of computational time on the Summit computing facility, the world’s most powerful GPU-based supercomputer. The allocation is roughly equivalent to 1 billion central processing unit core-hours. The team extended its prior 2019 work by performing 10-species reacting-gas detached eddy simulations of oxygen and methane combustion along with the Martian CO2 atmosphere using meshes of billions of elements, routinely running on 16,000 GPUs, or the equivalent of several million CPU cores.
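
The two equivalences quoted above (node-hours to CPU core-hours, and GPUs to CPU cores) can be checked against each other with a few lines of arithmetic. The sketch below is only a rough consistency check, not the team's own accounting. It assumes six GPUs per Summit node, a figure that does not appear in the article, and the function names are illustrative.

```python
# Figures quoted in the article
NODE_HOURS = 770_000                  # Summit allocation, in node-hours
CPU_CORE_HOURS_EQUIV = 1_000_000_000  # "roughly 1 billion" CPU core-hours
GPUS_PER_RUN = 16_000                 # GPUs used in a routine run

# Assumption (not stated in the article): each Summit node carries 6 GPUs
GPUS_PER_NODE = 6


def cores_per_gpu(node_hours, core_hours, gpus_per_node):
    """Implied number of CPU cores that one GPU stands in for."""
    gpu_hours = node_hours * gpus_per_node
    return core_hours / gpu_hours


def equivalent_cpu_cores(gpus, ratio):
    """CPU-core equivalent of a run on `gpus` GPUs at the given ratio."""
    return gpus * ratio


ratio = cores_per_gpu(NODE_HOURS, CPU_CORE_HOURS_EQUIV, GPUS_PER_NODE)
cores = equivalent_cpu_cores(GPUS_PER_RUN, ratio)

print(f"Implied CPU cores per GPU: {ratio:.0f}")
print(f"CPU-core equivalent of {GPUS_PER_RUN:,} GPUs: {cores / 1e6:.1f} million")
```

Under that assumption, the allocation implies a little over 200 CPU cores per GPU, which puts a 16,000-GPU run at roughly 3.5 million CPU cores. That is in line with the "several million" figure quoted above.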
