Infrared cameras provide better vision

By the end of the year, the FAA is expected to issue a rule that will permit passenger planes equipped with certain kinds of infrared and other cameras to operate much more freely in low-visibility conditions. The new rule could provide substantial economic incentives for commercial and other aircraft to adopt a technology that has been installed on relatively few aircraft, mostly high-end business jets and some express-cargo jets.

It’s been a long road getting to this point.

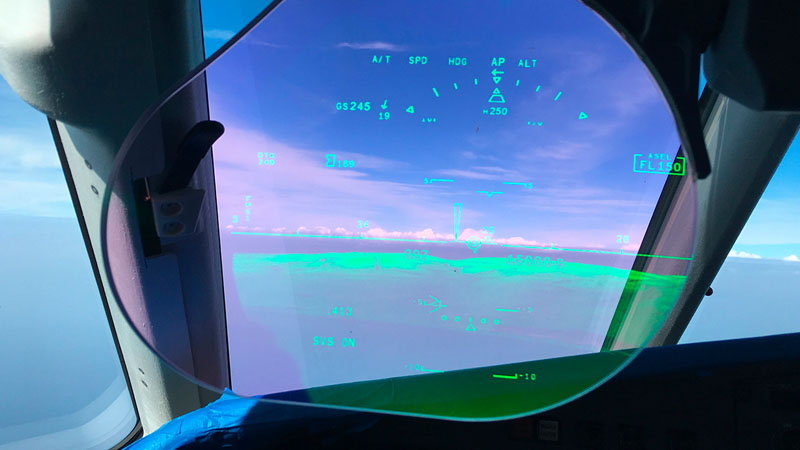

In 2013, the FAA issued a notice of proposed rule-making saying it intended to allow more operational freedom in bad weather for aircraft — including airline passenger planes — with adequate enhanced flight vision systems. In the cockpit, infrared and other images will be projected onto a transparent screen, called a head-up display, or HUD. Large passenger aircraft with the systems would be allowed to take off, head toward and land at airports where they’d previously been barred during times of low visibility caused by weather. Overall, the change should mean fewer delays, cancellations and re-routings.

To justify installing enhanced vision systems, developers realized they’d need to focus on cost so that the airlines would judge the technology affordable. That meant minimizing the cost of the equipment as well as its installation and maintenance. Downtime would have to be acceptable, as would the size, weight and power needed by the enhanced-vision equipment. All this would have to be done even during the shift to new airfield lighting technology that complicates matters for cameras.

Elbit Systems, Honeywell and Rockwell Collins led the way on enhanced vision — in Elbit’s case, by applying technology originally developed for defense applications. In 2013, Rockwell decided to develop a new enhanced-vision system in hopes of increasing performance over an earlier iteration while also restraining life-cycle costs to make installation attractive to airlines and other operators.

Improving an existing system

Rockwell’s Carlo Tiana, a principal system engineer, says the company capitalized on improvements in sensing materials and processing power and made wise technology and design choices. A key step was coping with the change in airfield ground lighting, which took some clever programming.

Rockwell began installing simple versions of HUDs in airline cockpits in 1985 to display basic information, such as flight paths, speed and altitude. In 2004, it began adding systems made by other manufacturers to show enhanced-vision video on the HUD. These first-generation systems imaged the scene in front of an aircraft with a single, cooled, extended midwave infrared camera on the nose of the plane to sense wavelengths of 1 micron to 5 microns. Early systems required liquid nitrogen refrigeration to achieve very low focal plane temperatures for detection of small temperature differences.

That is the way infrared works: By detecting differences in the temperature of objects — either self-generated or retained from solar heat — it distinguishes different objects and surfaces. But cooling for sensitivity added weight, cost and reliability problems.

Early systems usually came in three parts. A camera with the sensor was recessed in the nose of the aircraft. The sensor looked through a window in the fuselage made of transparent sapphire stone, a material chosen for such applications because of its hardness. This window had to be large to provide sufficient field of view, and it had to be heated for de-icing. Sometimes, a separate processor converted sensor data into images for the HUD. In other cases, there was a separate de-icing heater control. This complexity added weight far forward of the aircraft’s center of gravity, took up premium space and decreased mean time between failures.

Rockwell wanted a better performing system that was simpler, lighter, smaller and more reliable.

According to Nate Kowash, Rockwell’s program manager and engineer for enhanced vision, cooling had been needed to achieve necessary performance. A mechanical cryo-cooler maintained a sensor’s focal plane array — the device that takes the image — at very low temperatures, as low as minus 196 Celsius (minus 321 Fahrenheit). The goal was maintaining the array at this temperature, but other components, such as its vacuum insulation vessel, also had to be cooled to achieve this, which caused additional inefficiency in cooling. But by 2013, Rockwell could buy customized, uncooled long-wave systems at one-fourth the cost of cooled sensors. Demand in military and law enforcement markets had prompted advances in uncooled infrared technology. And using a long-wave sensor and a short-wave sensor had always been preferable to using one midwave sensor.

Midwave infrared is good at detecting temperature differences in terrain, but it is not so accurate at detecting short-wave infrared radiation of 1 micron to 1.5 microns from incandescent airport approach lights. By 2013, high-performance uncooled short-wave sensors were available that were more sensitive in this band than cooled midwave sensors, and Rockwell decided to add one to see lights better.
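The split between bands follows from basic blackbody physics. As a rough illustration, Wien's displacement law gives the wavelength at which a warm object radiates most strongly. The Python sketch below applies it; the filament and terrain temperatures are assumed round numbers chosen for illustration, not figures from Rockwell, and `peak_wavelength_um` is a hypothetical helper name.

```python
# Wien's displacement constant, expressed in micron-kelvins.
WIEN_B_UM_K = 2897.771955


def peak_wavelength_um(temp_k: float) -> float:
    """Wavelength (in microns) of peak blackbody emission at temp_k kelvins."""
    if temp_k <= 0:
        raise ValueError("temperature must be positive (kelvins)")
    return WIEN_B_UM_K / temp_k


# Assumed, illustrative temperatures (not taken from the article):
FILAMENT_K = 2900.0  # rough incandescent filament temperature
TERRAIN_K = 300.0    # rough ambient terrain temperature

filament_peak = peak_wavelength_um(FILAMENT_K)
terrain_peak = peak_wavelength_um(TERRAIN_K)
print(f"filament peak: {filament_peak:.2f} microns")
print(f"terrain peak:  {terrain_peak:.2f} microns")
```

Under these assumptions, a filament's emission peaks near 1 micron, inside the short-wave band, while terrain near ambient temperature peaks close to 10 microns, in the long-wave band. Real lamps and terrain are not ideal blackbodies, so this is only a first-order guide.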

Demanding applications

Enhanced-vision performance is measured in several ways. Noise-equivalent temperature difference describes the ability of thermal sensors to detect differences in temperature, which equates to an ability to distinguish more objects on terrain. Technologists who work on short-wave sensors refer to this as detectivity. By selecting an uncooled short-wave sensor with a focal plane made of the alloy indium gallium arsenide instead of indium antimonide, Rockwell improved detectivity by about 100 times over old systems that stretched midwave sensors to see short-wave radiation. Indium gallium arsenide at room temperature detects short-wave infrared much better than indium antimonide.

Another metric is dynamic range. At high altitudes, sensors must see very weak signals through many layers of atmosphere. Nearer to landing, sensors must not be blinded by extraordinarily bright airport lights.

“They must adapt to both tiny and immense signals,” Tiana explains.

The one-sensor systems had trouble with this challenge. But with two sensors for different tasks, Rockwell could optimize each for different degrees of brightness.
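Dynamic range is often expressed as the ratio of the brightest to the faintest usable signal, in decibels. The Python sketch below uses the 20·log10 convention common for image sensors; the function names and the example signal levels are hypothetical and carry no measured values from any enhanced-vision system.

```python
import math


def dynamic_range_db(brightest: float, faintest: float) -> float:
    """Ratio of largest to smallest usable signal, in decibels (20*log10)."""
    if brightest <= 0 or faintest <= 0:
        raise ValueError("signal levels must be positive")
    return 20.0 * math.log10(brightest / faintest)


def bits_needed(brightest: float, faintest: float) -> int:
    """Smallest whole number of bits that spans the same ratio linearly."""
    if brightest <= 0 or faintest <= 0:
        raise ValueError("signal levels must be positive")
    return math.ceil(math.log2(brightest / faintest))


# Hypothetical example: a scene whose brightest light is 1,000 times
# stronger than its faintest detectable feature.
print(dynamic_range_db(1000.0, 1.0))
print(bits_needed(1000.0, 1.0))
```

A single sensor has to cover the whole ratio by itself. Splitting the job lets one sensor be tuned for weak terrain signals and another for bright lights, so neither has to span the full range.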

Another requirement is preventing blooming. Blooming occurs when light scatters on a wet window or in the atmosphere. This effect can be caused by a camera flash or oncoming high-beam headlights. Rockwell prevents blooming from wet surfaces optically by not focusing sensors on the window where moisture gathers. The system mitigates atmospheric blooming by processing images from the short-wave sensor.

As efforts proceeded, airports were changing infield lights (but not approach lights) to light-emitting diodes or LEDs. These require about 40 percent less power than incandescent bulbs and on average last much longer. But LEDs don’t get warm enough to be visible to infrared sensors, either short- or midwave. So Rockwell needed a visible-light sensor to see better than the human eye in low visibility. “It’s hard to beat the human eye,” Tiana notes.

Rockwell modified the optics of this visible-light sensor to improve imagery during day and night. It also added gain-control algorithms to process visible-light images and extract maximum performance in poor weather.
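Rockwell has not published its gain-control algorithms. As a generic illustration of the idea, the Python sketch below shows one common approach, a percentile-based contrast stretch, which rescales a low-contrast frame so its usable signal fills the display range. The function name `auto_gain` and its parameters are hypothetical.

```python
def auto_gain(pixels, low_pct=1.0, high_pct=99.0, out_max=255):
    """Stretch pixel values so the low/high percentiles map to 0/out_max.

    Values outside the percentile window are clipped, so a few very
    bright or very dark pixels do not dictate the gain for the frame.
    """
    if not pixels:
        return []
    ordered = sorted(pixels)
    n = len(ordered)
    lo = ordered[int((n - 1) * low_pct / 100.0)]
    hi = ordered[int((n - 1) * high_pct / 100.0)]
    if hi == lo:
        # Flat frame: no contrast to stretch, return mid-gray.
        return [out_max // 2] * n
    scale = out_max / (hi - lo)
    return [min(out_max, max(0, round((p - lo) * scale))) for p in pixels]


# A hypothetical foggy frame: all values squeezed into a narrow band.
foggy = [100, 101, 102, 103, 104]
print(auto_gain(foggy, low_pct=0.0, high_pct=100.0))
```

The stretch cannot recover detail that never reached the sensor; it only makes the most of whatever contrast survives the fog.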

“Nonetheless,” Tiana acknowledges, “there are fundamental physics” that made the challenge hard. Seeing LED lights when they are blocked by fog, smoke or rain better than the human eye is difficult, but that is just what the new system had to do.

So three well-tailored sensors (long-wave, short-wave and visible light) dramatically improved performance. “The three current sensors each performs an essential function, and the suite detects all airfield features required for landing and taxiing,” Tiana explains.

Rockwell also had to make sure the system would not be barred from export under the U.S. International Traffic in Arms Regulations.

“The performance requirements for our application are very different from those of military applications,” Tiana says. “We customized our technologies to make sure they would provide maximum performance for our environment and not be useful for offensive battlefield applications.”

But three sensors presented a computing challenge, because different images would have to be fused into one view of the environment. Tiana describes that fusion as a major hurdle. The most visually beneficial portions of each sensor’s data had to be fused while excluding nonbeneficial elements. Fused video images also have to overlay the real world outside the cockpit exactly where they should be. And fusion must be done almost instantaneously. FAA certification requires no more than 100 milliseconds of latency from the moment a photon enters the sensor to the moment the corresponding photon exits the HUD toward the pilot’s eye.
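Rockwell's fusion algorithms are patented and not described here. As a generic illustration only, the Python sketch below shows one textbook idea: weight each sensor's pixel by its local contrast, so whichever sensor sees detail at a given spot dominates the fused image there. The function names, the 3x3 neighborhood and the `eps` floor are all assumptions made for this example, and the images are assumed to be already aligned and equal in size.

```python
def local_contrast(img, r, c):
    """Absolute difference between a pixel and its 3x3 neighborhood mean."""
    rows, cols = len(img), len(img[0])
    neigh = [img[i][j]
             for i in range(max(0, r - 1), min(rows, r + 2))
             for j in range(max(0, c - 1), min(cols, c + 2))]
    return abs(img[r][c] - sum(neigh) / len(neigh))


def fuse(images, eps=1e-6):
    """Per-pixel weighted average of aligned images, weighted by contrast.

    eps keeps the weights nonzero so flat regions fall back to a plain mean.
    """
    rows, cols = len(images[0]), len(images[0][0])
    fused = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            weights = [local_contrast(img, r, c) + eps for img in images]
            total = sum(weights)
            fused[r][c] = sum(w * img[r][c]
                              for w, img in zip(weights, images)) / total
    return fused


# Hypothetical 3x3 frames: one sensor sees nothing, the other sees a light.
flat = [[0.5, 0.5, 0.5], [0.5, 0.5, 0.5], [0.5, 0.5, 0.5]]
light = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
result = fuse([flat, light])
print(round(result[1][1], 3))
```

A production system would also have to register the images to one another and to the outside world, and finish within the latency budget; this sketch addresses neither.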

Rockwell engineers tapped all the available literature on image merging. Still, they had to do a lot of customization to come up with fusion algorithms suited to the enhanced-vision problem. Sufficient original programming was done that Rockwell took out a couple dozen patents on its image-fusion software. The entire effort took several years.

The elaborate, rapid calculation for fusing images once would have required a supercomputer. Today, a much smaller processing device can handle it. That compactness was essential for the design of Rockwell’s new enhanced vision system, the EVS-3000.

Instead of the three-piece earlier system, Rockwell designed a single line replaceable unit to ease maintenance for cost-sensitive airlines. All processing is done in a processor embedded in the enhanced vision camera. Instead of creating a window in the fuselage for the camera, Rockwell designed the camera to sit on the nose of an aircraft. Video imagery passes through a fiber-optic cable to a computer and then to the HUD.

Putting the camera up front posed challenges. While the former window method required de-icing by heat-control units, it could be set flush with the fuselage, minimizing drag without affecting the optical train of the camera. In the new system, Rockwell had to minimize drag by jointly setting the optical train of the lenses and the window position. The simplicity of an integrated package and elimination of cooling cut costs, increased reliability and eased maintenance. Tiana estimates the new system’s mean time between failures is two to three times that of the old, three-part, cooled system. It weighs a little less than 5 kilograms and requires a third less power at peak.

Rockwell completed the EVS-3000 in 2015, after two years of development. The company anticipates the FAA will soon certify the EVS-3000 on the Embraer Legacy 450 and 500. Embraer and other manufacturers are anxious for the FAA to issue the final rule for enhanced-vision operations. The 2013 draft would allow pilots in suitably equipped aircraft to continue descending below 100 feet in bad visibility. Aircraft with enhanced vision could take off under instrument flight rules even when visibility is less than that now prescribed for instrument approaches.

Views from other sectors

Rockwell hasn’t been the only company working on enhanced vision. Honeywell developed a system that projects images on head-down displays in the cockpit. And Elbit Systems of America was the first to certify enhanced vision and began installing a second-generation system — with a single cooled sensor to detect 1-5 micron wavelengths — on HUDs in FedEx Express MD10s and MD11s in 2008.

FedEx has since equipped about 270 aircraft with enhanced vision systems and is currently installing them on new 767 and 777 freighters upon delivery. Installation takes 12 days for each aircraft.

FedEx installed the systems partly because the company saw benefits in seeing in the dark during its many night landings and takeoffs. Passenger carriers take off and land mostly in daytime. Furthermore, the FAA allows properly equipped FedEx aircraft to use enhanced vision, rather than the pilot’s natural vision, to descend to 100 feet on landing approaches and to begin these approaches when natural visibility is as little as 1,000 feet. This is especially useful at small and midsize airports that do not have the most advanced instrument landing systems.

“There are improvements in situation awareness, energy management and basic flight path control,” says FedEx’s Dan Allen, managing director of flight operations and regulatory compliance. Other benefits include safer taxiing and better airport awareness in reduced visibility.

Elbit’s newer ClearVision enhanced vision system uses six uncooled sensors covering the full spectrum of energy between visible light and long-wave infrared.

“It’s a multispectral system,” explains Dror Yahav, Elbit vice president of commercial aviation. “Each has its own range depending on conditions and weather. For example, thick fog is different than smoke. Software fuses sensor images for the best picture.”

In developing image-fusion algorithms for commercial enhanced vision, Elbit applied its long experience with military enhanced vision. But Yahav emphasizes the civilian application is a “clean-sheet system with no military content.”

Yahav notes that Elbit enhanced vision is standard equipment on many of the latest Gulfstream business jets; has been certified on MD10s, MD11s, 757s, 777s, 767s and A300s; and has been selected for the new COMAC C919.

Elbit has been working closely with the FAA on the new enhanced-vision rule. Yahav believes it will be a strong operational advantage for aircraft equipped with enhanced vision to descend to touchdown in bad weather beyond even the limits now allowed with the most advanced instrument landing systems. And many airports have much more basic instrument systems. ★
