Stay Up to Date

Submit your email address to receive the latest industry and Aerospace America news.

Touching down on the moon safely, accurately and autonomously will require highly capable cameras and software. Dominic Maggio and Brett Streetman of Draper take us inside the flight testing of their team’s landing system.

A spacecraft on a descent toward the lunar surface has a singular goal of landing in a location that’s free of boulders, craters and other hazards. For the coming wave of uncrewed landers destined for the lunar poles and far side, a safe landing is anything but guaranteed.

As space system engineers at Draper, we are keenly aware of how difficult it is to make it safely to the surface of the moon or a planet. So, we built and flight tested the Draper Multi-Environment Navigator, or DMEN, which combines a computer, terrain analysis software and two video cameras that can be encased together in a shoebox-sized protective housing. Our end goal in this effort is to get DMEN ready for Draper’s CP-12 mission, which is one of the lander missions NASA has funded under its Commercial Lunar Payload Services program.

As a terrain relative navigation system, DMEN fits within NASA’s broad mandate from the early 2000s for members of the space community to develop an integrated suite of landing and hazard avoidance capabilities for planetary missions.

Flight tests can, of course, present their own challenges. We pushed a few boundaries and learned a few things when our DMEN system flew to an altitude of 8 kilometers on board the uncrewed Blue Origin New Shepard NS-23 mission last July and, three years earlier, on a World View Enterprises high-altitude balloon flight, which reached the Earth’s stratosphere at 33 kilometers. Both flight campaigns were made possible with funding from NASA’s Flight Opportunities office.

Neither flight went without challenges. New Shepard’s capsule escape system was triggered, ending our data collection (or so we thought at the time), although all of the NS-23 payloads landed safely near the Texas launch site. The balloon flight took place on a particularly windy day in Arizona, resulting in lots of debris and a spinning balloon. But we improved DMEN as a result of both missions, moving it closer to serving as the visual-inertial navigation method for Draper’s CP-12 mission.

The balloon flight

Draper, based in Cambridge, Massachusetts, earned legendary status in the 1960s for developing the Apollo guidance computer. In fact, we received the first major contract on Apollo, back when we were the MIT Instrumentation Lab, which later became the Charles Stark Draper Laboratory. Since then, Draper has contributed to the space shuttle program and International Space Station, and we are now contributing to the Artemis moon program thanks to our solid grounding in space systems. Such a heritage adds a dose of pressure in developing a terrain relative navigation system like DMEN.

Weighing just 3 kilograms, DMEN correlates the images from its cameras with onboard maps and, twice per second, runs a multistep process to calculate the host spacecraft’s position and altitude during descent. DMEN crunches the data using software dubbed IBAL (pronounced like you think), short for Image-Based Absolute Localization. This system was our answer to NASA’s mandate.

In Arizona, we met up with a team from World View, who helped us put DMEN to the test. World View is a commercial stratospheric flight company selected by NASA to integrate and fly technology payloads to the boundary of space. In April 2019, World View launched DMEN with several unrelated payloads from Spaceport Tucson, the company’s primary launch location for high-altitude missions.

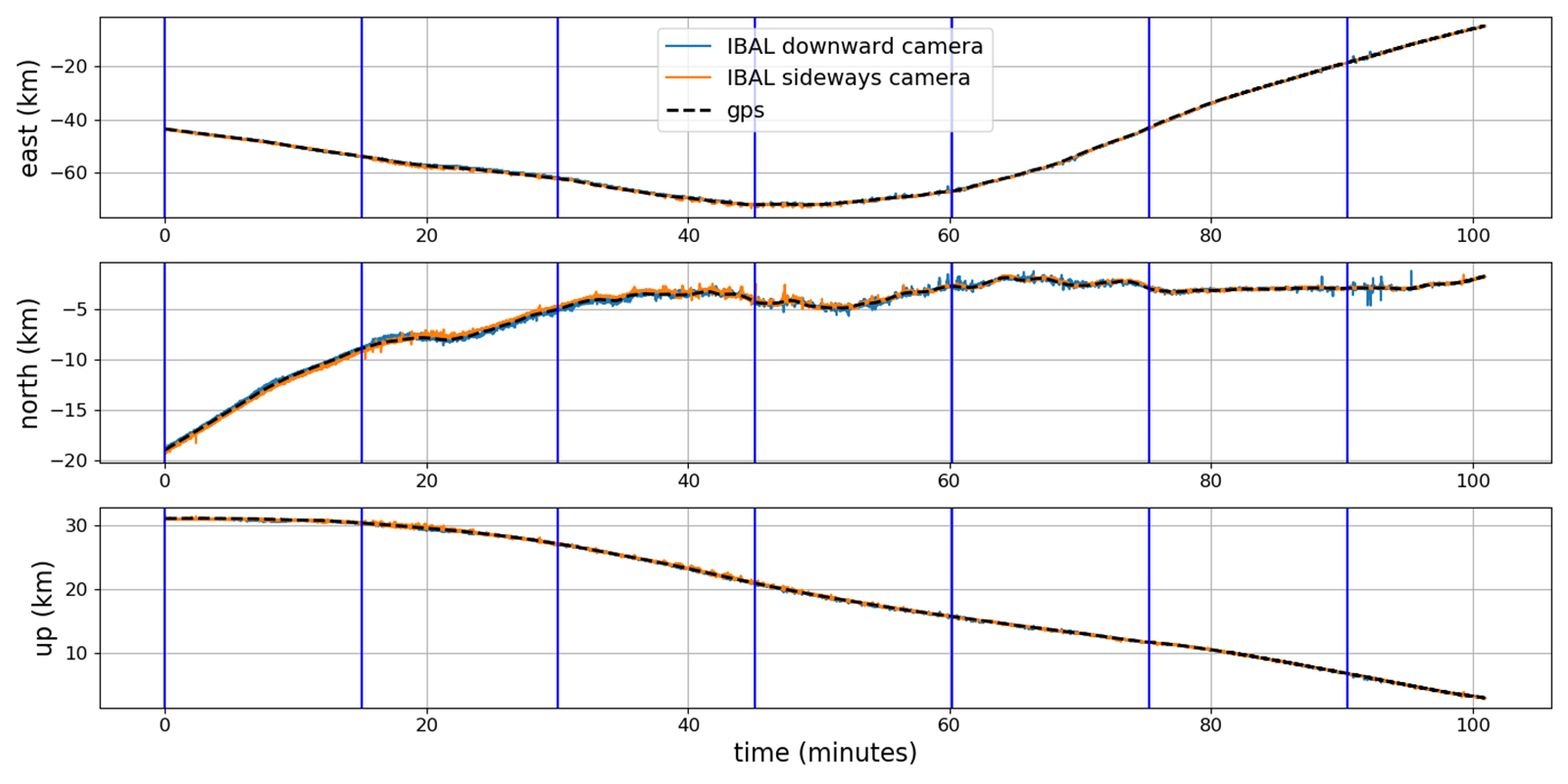

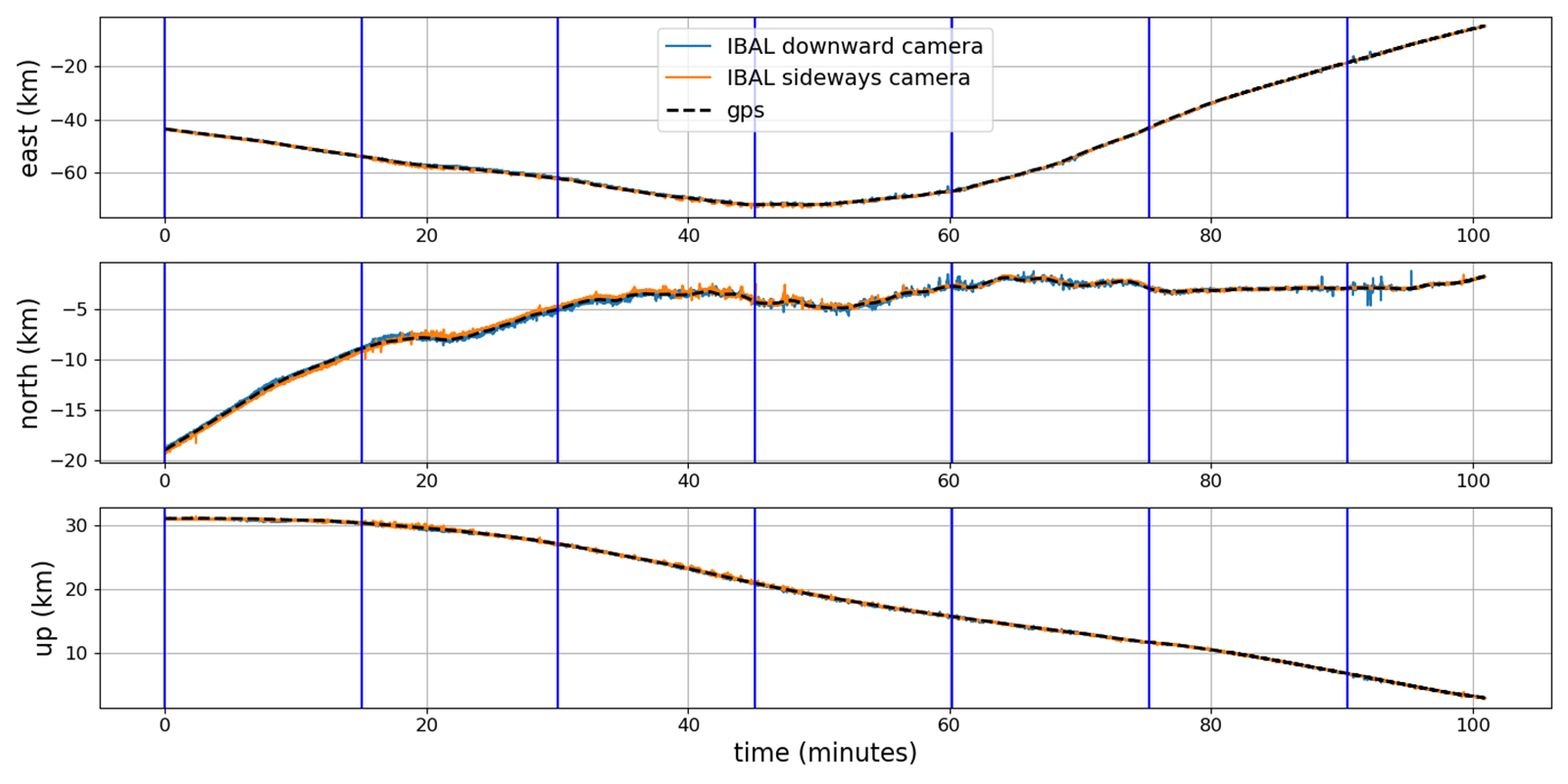

We watched as the balloon climbed to an altitude of 108,000 feet (about 33 kilometers). DMEN gathered images at 20 hertz from its downward-facing camera and from its sideways-tilted camera, which was angled at about 45 degrees. Using the flight data, DMEN, encased in its protective housing for this test, compared the prominent terrain features in the camera images with a satellite image database of the area that had been preloaded for this purpose. Potential matches were then passed to an optimization routine that both rejected false matches and produced an optimal estimate of the vehicle’s pose. The whole process took less than a second.
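To make the match-then-reject step concrete, here is a minimal sketch of one common way to discard false matches: a RANSAC-style consensus vote. It is not the IBAL flight software. It assumes the only unknown is a 2D shift between image features and map features, whereas a real system solves for full 3D pose, and the function name `ransac_offset`, the tolerance and the synthetic points are all hypothetical.

```python
import math
import random
from statistics import mean
from typing import List, Tuple

Point = Tuple[float, float]


def ransac_offset(image_pts: List[Point], map_pts: List[Point],
                  tol: float = 5.0, iters: int = 200,
                  seed: int = 0) -> Tuple[Point, List[int]]:
    """Estimate the (dx, dy) shift from image_pts to map_pts, ignoring outliers."""
    if not image_pts or len(image_pts) != len(map_pts):
        raise ValueError("need equal-length, non-empty match lists")
    rng = random.Random(seed)  # seeded so the sketch is repeatable
    # Shift implied by each candidate match, taken on its own.
    residuals = [(q[0] - p[0], q[1] - p[1]) for p, q in zip(image_pts, map_pts)]
    best: List[int] = []
    for _ in range(iters):
        # Hypothesize a shift from one randomly chosen candidate match.
        dx, dy = residuals[rng.randrange(len(residuals))]
        # Matches that agree with the hypothesis within tol are inliers.
        inliers = [k for k, (rx, ry) in enumerate(residuals)
                   if math.hypot(rx - dx, ry - dy) <= tol]
        if len(inliers) > len(best):
            best = inliers
    # Refine: average the shift over the largest consistent set.
    offset = (mean(residuals[k][0] for k in best),
              mean(residuals[k][1] for k in best))
    return offset, best


# Synthetic data (assumed): six true matches shifted by (120, -45), two false ones.
image_pts = [(10.0, 10.0), (50.0, 20.0), (90.0, 80.0),
             (30.0, 70.0), (60.0, 60.0), (15.0, 95.0),
             (40.0, 40.0), (75.0, 25.0)]
map_pts = [(x + 120.0, y - 45.0) for x, y in image_pts[:6]]
map_pts += [(400.0, 300.0), (5.0, 5.0)]  # deliberately wrong matches

offset, inliers = ransac_offset(image_pts, map_pts)
print(offset, inliers)
```

The two deliberately wrong matches never gather more than one vote each, so the six consistent matches win and the refined shift is averaged over those alone.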

The Arizona flight had a few challenges for visual navigation. The balloon regularly experienced rapid rotations, in some cases over 20 degrees per second. Cords hanging below the balloon were visible to the downward-facing camera. Clouds blocked part of the terrain.

But challenges were exactly what we wanted to encounter in order to prove DMEN’s capabilities. During a real mission, DMEN and IBAL might need to overcome obscurants such as exhaust or ground debris kicked up during descent, which can interfere with a camera’s ability to track terrain features and compromise a safe descent and landing.

The balloon flight showed us that DMEN can accurately navigate a payload at a high altitude, using only imagery and inertial measurements. We also learned that DMEN could accommodate differences in camera angles, vehicle constraints and mission requirements.

The rocket flight

The balloon flight had proven DMEN could perform well at high altitudes and during rapid rotations, but to validate our system at high speeds, we needed a rocket, and we selected Blue Origin’s New Shepard.

We prepped our software and hardware at Draper here in Cambridge and then shipped them to Van Horn, Texas, for launch. The plan was to spend a couple of days mounting DMEN inside the capsule, including two cameras looking out the capsule windows.

IBAL’s database was automatically constructed by extracting image patches from U.S. Geological Survey satellite maps. Combined with elevation maps, each patch could be labeled with a known GPS location and elevation. As in the balloon flight, we used a single database created before flight.
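As a rough illustration of what a patch database like this might hold, the sketch below cuts a small georeferenced image into patches and labels each patch center with latitude, longitude and elevation. It is a simplified stand-in rather than IBAL’s actual construction: the `MapPatch` fields, the north-up image with a constant degrees-per-pixel scale, and the sample coordinates are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class MapPatch:
    """One database entry (hypothetical layout)."""
    pixels: Tuple[Tuple[float, ...], ...]  # patch intensities, row by row
    lat_deg: float                         # latitude of the patch center
    lon_deg: float                         # longitude of the patch center
    elevation_m: float                     # elevation at the patch center


def build_patch_database(image: Sequence[Sequence[float]],
                         elevation: Sequence[Sequence[float]],
                         north_lat: float, west_lon: float,
                         deg_per_px: float,
                         size: int, stride: int) -> List[MapPatch]:
    """Slide a size x size window over a north-up image and label each patch.

    Assumes pixel (0, 0) sits at (north_lat, west_lon) and that the
    elevation grid is aligned pixel-for-pixel with the image.
    """
    rows, cols = len(image), len(image[0])
    patches: List[MapPatch] = []
    for r in range(0, rows - size + 1, stride):
        for c in range(0, cols - size + 1, stride):
            cr, cc = r + size // 2, c + size // 2  # center pixel of the patch
            patches.append(MapPatch(
                pixels=tuple(tuple(row[c:c + size]) for row in image[r:r + size]),
                lat_deg=north_lat - cr * deg_per_px,  # rows run southward
                lon_deg=west_lon + cc * deg_per_px,   # columns run eastward
                elevation_m=elevation[cr][cc],
            ))
    return patches


# Tiny synthetic inputs (assumed values, not real map data).
image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
elevation = [[700.0 + 10 * r + c for c in range(4)] for r in range(4)]
db = build_patch_database(image, elevation, north_lat=32.0, west_lon=-111.0,
                          deg_per_px=0.25, size=2, stride=2)
print(len(db), db[0])
```

Because every patch carries its own ground coordinates, a successful match between a camera feature and a patch immediately ties that feature to a known point on the surface.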

At Van Horn, we inspected and tested the software to ensure it was ready for the mission. The prep work took place in the weeks leading up to launch, when we ran test code and hardened our in-flight software. Just like for the balloon flight, we installed two cameras on the capsule in order to run IBAL separately with each camera and see how different angles and terrain affected the navigation solution.

During flight, the DMEN computer is designed to operate autonomously and determine on its own when to turn on, initiate its sensors, log the data and seal the data. As with the balloon mission, we wouldn’t know the results of NS-23 until we brought the unit, including its computer, back to Draper for processing.

As Blue prepared to launch New Shepard, the DMEN team gathered for a watch party at Draper. When the capsule escape system was triggered, those of us on site and at Draper gasped.

Suddenly, we faced uncertainty. We didn’t know how much data we would have to work with. We didn’t even know if our computer had logged anything. We would have to wait until the DMEN computer was returned for analysis.

Back at Draper, we unpacked DMEN’s flight computer from the case and connected it to our monitors to see if the data had been logged. This was the watch party most of us were actually worried about. Whatever the rocket does during launch is out of our control, but what’s on this computer tells us if we did our job correctly. Watching all the data appear exactly as it should became a moment of celebration.

With the data in hand, we next wanted to see if IBAL could use it to navigate. That brought the next victory. DMEN aced this flight test, with IBAL achieving average position error for the flight of less than 55 meters, despite reaching an altitude of 8 kilometers and speeds of up to 880 kilometers per hour.
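For readers unfamiliar with the metric, an average position error is typically the mean distance between each navigation estimate and a reference position recorded at the same moment. The sketch below shows that calculation under stated assumptions: positions are expressed in meters in a shared local frame, the two tracks are already time-aligned, and the sample numbers are invented for illustration rather than taken from the NS-23 data. How the flight figure itself was computed is not described here.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def mean_position_error(estimates: Sequence[Vec3],
                        reference: Sequence[Vec3]) -> float:
    """Mean Euclidean distance, in meters, between paired position fixes."""
    if not estimates or len(estimates) != len(reference):
        raise ValueError("need equal-length, non-empty position tracks")
    return sum(math.dist(e, r) for e, r in zip(estimates, reference)) / len(estimates)


# Invented sample tracks (east, north, up) in meters.
reference = [(0.0, 0.0, 0.0), (100.0, 0.0, 1000.0), (200.0, 0.0, 2000.0)]
estimates = [(30.0, 40.0, 0.0), (100.0, 0.0, 1000.0), (200.0, 60.0, 2080.0)]

avg_error_m = mean_position_error(estimates, reference)
print(f"average position error: {avg_error_m:.1f} m")
```

A single average hides how the error varies over the flight, so in practice it is usually reported alongside the maximum error or a plot of error against time.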

Looking forward

The successful flight tests were a huge win for us, but we’re already looking ahead. Plans are underway to add a secondary, completely independent software monitor that checks IBAL’s performance during runtime, an enhancement that will leverage advances in machine learning and give the system additional redundancy. Future experiments with these datasets may give us new insights into how perception methods can be advanced in systems like DMEN. Future versions could also be self-monitoring, operate without human supervision and better identify terrain using satellite imagery.

With these missions, we placed DMEN at a Technology Readiness Level of 5 on NASA’s 9-point scale, because it has been flown in a relevant environment. We collected data and validated algorithms in a suborbital environment, which is now helping Draper advance DMEN’s TRL toward Level 9, which in this case means flight in the intended operational environment of space.

Software used in DMEN and IBAL is on course to be loaded and tested in Draper’s guidance, navigation and control simulation lab for the CP-12 mission. DMEN is well on its way as a candidate for human missions, including Artemis astronaut landings.

Dominic Maggio is an MIT graduate student and Draper Scholar whose research focuses on autonomous vehicles and related fields, including terrain relative navigation.

Brett Streetman is the principal investigator for the Draper Multi-Environment Navigator. At Draper, he has worked on guidance, navigation and control systems for the International Space Station, lunar landers, lunar exploration and satellites. He holds a Ph.D. in aerospace engineering from Cornell University.
