Applying AI to the right national security problems

July/August 2022

Leaders in the U.S. national security enterprise are intrigued by artificial intelligence. Capitalizing on this groundbreaking computing technology will require firsthand knowledge of mission needs and discipline in deciding which of those needs AI can meet. MITRE Corp.’s Eliahu “Eli” H. Niewood explains.

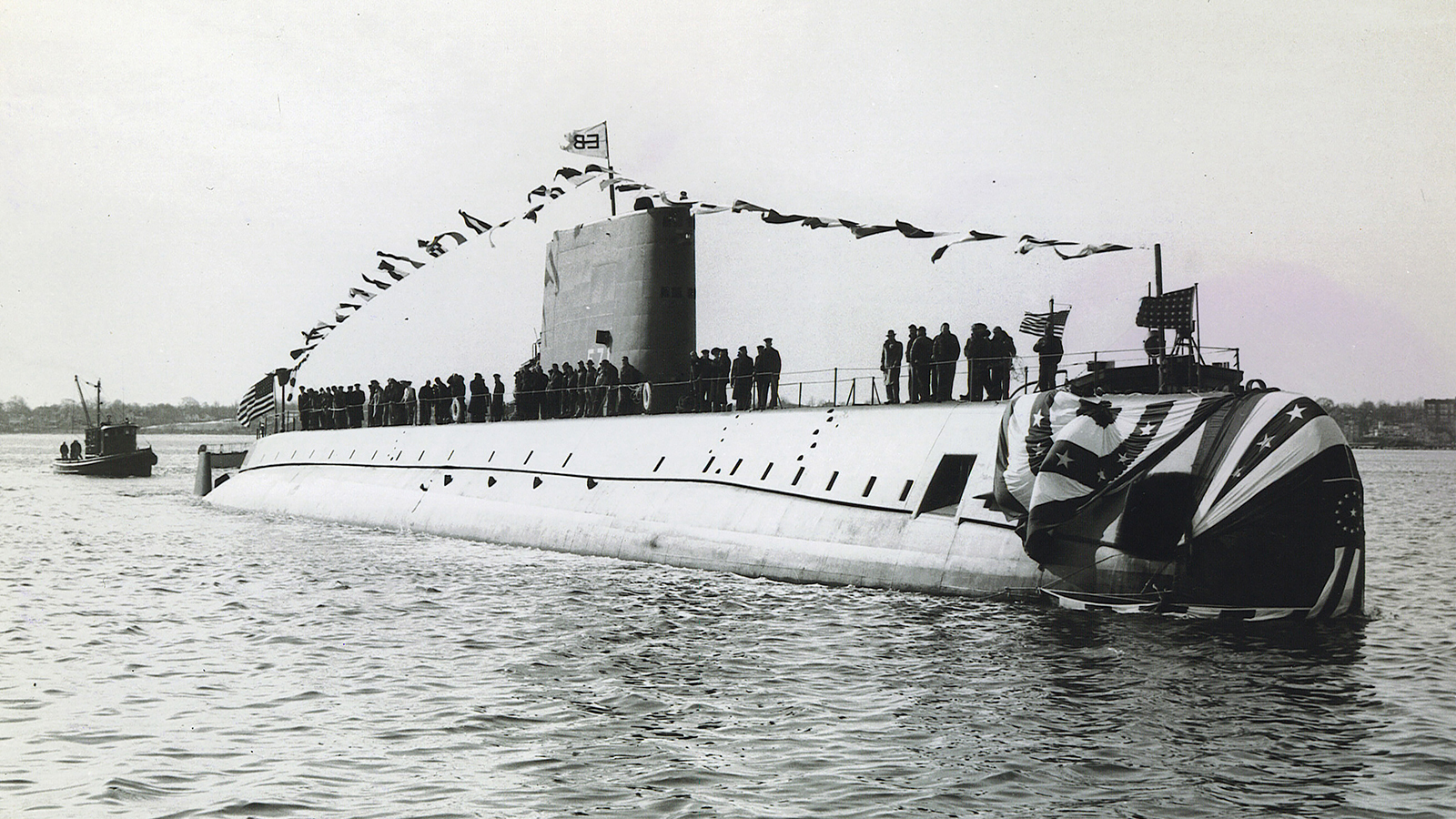

When I was 17, I met the father of the nuclear submarine, U.S. Navy Adm. Hyman Rickover, at a science fair I was participating in. When he stopped by my booth, I didn’t know who he was. He was at least six decades older than me and asking tough questions that I couldn’t answer. Only after he walked away did someone tell me about my brush with one of the greatest national security innovators in history.

Rickover is a great example of someone in national security who understood both state-of-the-art technology and mission need. Steeped in the intricacies of electrical engineering and having served on two submarines in the pre-World War II era, he approached the tremendous challenge of building a nuclear reactor in a sub with extensive firsthand knowledge, all with the ultimate goal of making our nation safer.

Like nuclear power in the 1950s, artificial intelligence could be transformational. Yet many in the U.S. national security enterprise today bemoan its slow adoption by the U.S. Department of Defense and the intelligence community. Many of the challenges we face with AI are shared with other technology areas that the department has been slow to operationalize compared to private industry.

Innovators in the consumer space are almost always solving a problem that they’ve experienced themselves or, at the very least, witnessed. The people who came up with coatings to allow ketchup to flow smoothly from a bottle were not alerted to the problem through a combatant commander’s integrated priority list. Rather, they probably ruined a tablecloth or two by hitting the bottom of the bottle too hard, and so they set out to solve the problem. Or consider car cupholders. I’d be willing to bet the designers of that innovation experienced the unique joy of hot coffee in their laps on a morning commute — or saw it happen to someone else.

Even in the commercial sector, where the problem space isn’t as universally understood as on the consumer side, many innovations come from people who have firsthand knowledge of the problems to be addressed. From the communications experts trying to solve the problems of maintaining signal to noise over long distances to the mechanical engineers who’ve spent thousands of hours on the machine shop floor, many in the commercial space have deep firsthand experience they can bring to bear.

By contrast, in the national security space, we start with significant separation between the people who have problems to solve and the people who understand and develop the technology to solve those problems. Then we increase that spacing through security classification and special access limitations — and then increase it a little more through contracting rules. It’s as though we’re seeking a solution to spilled coffee or an unwanted burst of ketchup by assigning someone who’s never been in a car or eaten a burger to issue a request for information seeking possible solutions.

Adding to the challenge, even the very warfighters for whom we are developing weapons and information systems likely won’t experience the need for them firsthand until they find themselves in a conflict. Command and control at the scale and complexity of what we will see in conflict with a peer nation probably can’t be replicated fully in any environment short of that conflict. Even a full-up exercise only comes close. And the challenges and cost of mounting that exercise mean it only happens a couple of times a year at best, so there’s little opportunity for experimentation.

So far, the national security enterprise has had some success at adopting commercial AI technology. The Defense Department and the intelligence community are making progress toward applying natural language processing, machine vision for electro-optical imagery and cyber monitoring of computer networks for malware and nefarious behavior. Yet the reality is that AI isn’t that different from other emerging technologies that have been slow to reach their full potential in national security while doing well in consumer and commercial spaces.

The problem isn’t ethical concerns around AI. Although intelligent weapons are new, we’ve had autonomous weapons since the first mine was set, since the first lock-on-after-launch missile was fired or, for that matter, since the first camouflage was laid over a pit with spikes. And the problem isn’t that we don’t have data lakes with multilevel security filled with all the data in the world. AI isn’t going to magically make sense of random bits of data pulled from a cloud somewhere.

The problem is, in fact, the problems people expect AI to solve.

We don’t have enough people who bring together an understanding of AI with a firsthand or even secondhand knowledge of the operational problems we’d like to solve. Too many of our senior leaders and even operators have an unrealistic view of what AI can and can’t do. Too many of our AI experts are naïve about the real problems. I’ve seen too many briefings from leaders and operators saying “and then we will use AI to do X” when X isn’t feasible yet. I’ve worked with brilliant academics who’ve developed revolutionary AI capabilities, but don’t understand the true complexity of combat.

Three national security problems that AI might help us solve

So, if AI can’t “magically” solve every problem, the key is to identify those where it might realistically offer significant impact. From there, we can establish some lasting partnerships by matching academics and AI developers with the right clearances to warfighters and operators. We could then build modeling and simulation sandboxes where technologists can apply their AI algorithms to reasonable-fidelity simulations that someone else builds for them and that pass muster with those who have firsthand experience. Following are a few examples of specific problems that are feasible to solve with AI but that the commercial sector alone won’t solve for us:

1. Creating courses of action for operational command and control

The U.S. National Defense Strategy recognizes that the joint force must be able to rapidly plan and execute operations simultaneously across all warfighting domains: land, sea, air, space and cyber. So the services and the intelligence community are working together to enable Joint All-Domain Command and Control (JADC2), a new battle command architecture for multidomain operations. But many of the conversations confuse development of resilient, cross-service communications systems (which would be an enabler for JADC2) with development of the actual sense-making and decision-making needed to advance the way we do command and control. While dumping enough data into a common data lake won’t allow AI to magically make sense of the world, AI is remarkably powerful at coming up with novel strategies for winning a variety of video and board games. We need to see if those same AI approaches could help us develop courses of action for operational-level decisions in conflict about how to use a set of sensors and weapons against a set of targets and tasks. Admittedly, as we try to bring capabilities from different domains and services together, the assignment problems get more complex and computationally difficult: These aren’t “games” where players take turns; there may be no way to measure the instantaneous value of a move; there’s no closed-form rule book to apply; and the game board changes over time and from case to case. Nevertheless, I believe existing AI approaches could be adapted to help warfighters make better battlefield decisions faster than the adversary while maintaining human oversight and the commander’s intent.
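To make the computational difficulty concrete, here is a minimal sketch of the simplest form of the assignment problem described above: pairing each asset with exactly one target to maximize a total score. It is an illustration only, not a method the author proposes. The function name `best_assignment`, the score table and its values are all hypothetical, and the sketch assumes a fixed, known score for every pairing, which is precisely what real operations lack.

```python
from itertools import permutations

def best_assignment(score):
    """Brute-force the one-to-one asset/target pairing with the highest total.

    score[i][j] is a hypothetical payoff for tasking asset i against target j.
    Assumes a square table (as many assets as targets).
    """
    n = len(score)
    best_total, best_perm = None, None
    # Every permutation is one candidate course of action: n! of them.
    for perm in permutations(range(n)):
        total = sum(score[i][perm[i]] for i in range(n))
        if best_total is None or total > best_total:
            best_total, best_perm = total, perm
    return best_total, list(best_perm)

# Hypothetical scores: rows are assets, columns are targets.
score = [
    [9, 2, 4],
    [3, 8, 5],
    [6, 7, 1],
]
total, assignment = best_assignment(score)
print(total, assignment)  # assignment[i] is the target index given to asset i
```

With three assets there are only six pairings to check, but the count grows factorially: ten assets already yield more than 3.6 million. Add changing scores, multiple domains and an adversary who moves at the same time, and exhaustive search stops being an option, which is why learned or heuristic approaches are of interest.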

2. Detecting anomalies

As we collect more and more data before crisis or conflict, we may be able to use AI techniques to understand normal behavior by adversary military units and automatically alert operators if something appears different from the everyday norm. Military analysts do this today, but it can be labor intensive, lack timeliness or be based on limited data. Human-machine teams may be the best choice for these applications.
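As a rough illustration of the idea, the sketch below learns a baseline from past observations and flags new observations that fall far outside it. It uses a simple z-score test in place of any particular AI technique. The function name `flag_anomalies`, the threshold and the sample counts are hypothetical, and a fielded system would need far richer features than a single number per day.

```python
from statistics import mean, stdev

def flag_anomalies(history, recent, threshold=3.0):
    """Return the recent observations that deviate sharply from the baseline.

    history: past observations treated as "normal" (needs at least two values).
    recent: new observations to check against that baseline.
    threshold: how many standard deviations count as anomalous (an assumption).
    """
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: anything different is worth a look.
        return [x for x in recent if x != mu]
    return [x for x in recent if abs(x - mu) / sigma > threshold]

# Hypothetical daily activity counts for one unit.
history = [10, 12, 11, 9, 10, 11, 12, 10, 9, 11]
recent = [10, 12, 25]
alerts = flag_anomalies(history, recent)
print(alerts)  # observations to pass to a human analyst for review
```

The output is a short list for an analyst to review rather than a conclusion, which fits the human-machine teaming described above: the machine narrows the search, and the human judges what the deviation means.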

3. Identifying and displaying the “right” data the right way

AI could help us understand what data or types of data different operators or different classes of operators might want to see and how that data should be presented to them. Again, if and when JADC2 becomes operational, the volume of data flowing to decision-makers is likely to grow significantly. It will be increasingly difficult for them to know what data to look at. For instance, a joint commander and maritime targeting cell operator may both need data from the same sources but otherwise have little overlap. And naturally operators will all respond differently to information displays. Perhaps automation schemes or AI could learn the preferences of individual operators or automatically configure workstations to their roles.

As with all national security innovations, when it comes to AI, we need to make the users and the mission problems they face front and center in our efforts to get emerging technology into the field. Rather than starting with the technology and bringing together technologists motivated by advancing the state of the art, we must identify the important problems that AI might realistically help us solve.

We can’t all be Adm. Rickover, both a technological visionary and warfighter. But we can match academics and AI developers with the right clearances to warfighters and operators — and pitch them against the right national security problems.

That’s how we can make our nation safer.

Eliahu “Eli” H. Niewood became MITRE Corp.’s vice president of air and space forces in July after two years as vice president of intelligence programs and cross-cutting capabilities. He holds bachelor’s, master’s and doctoral degrees in aeronautics and astronautics from MIT.